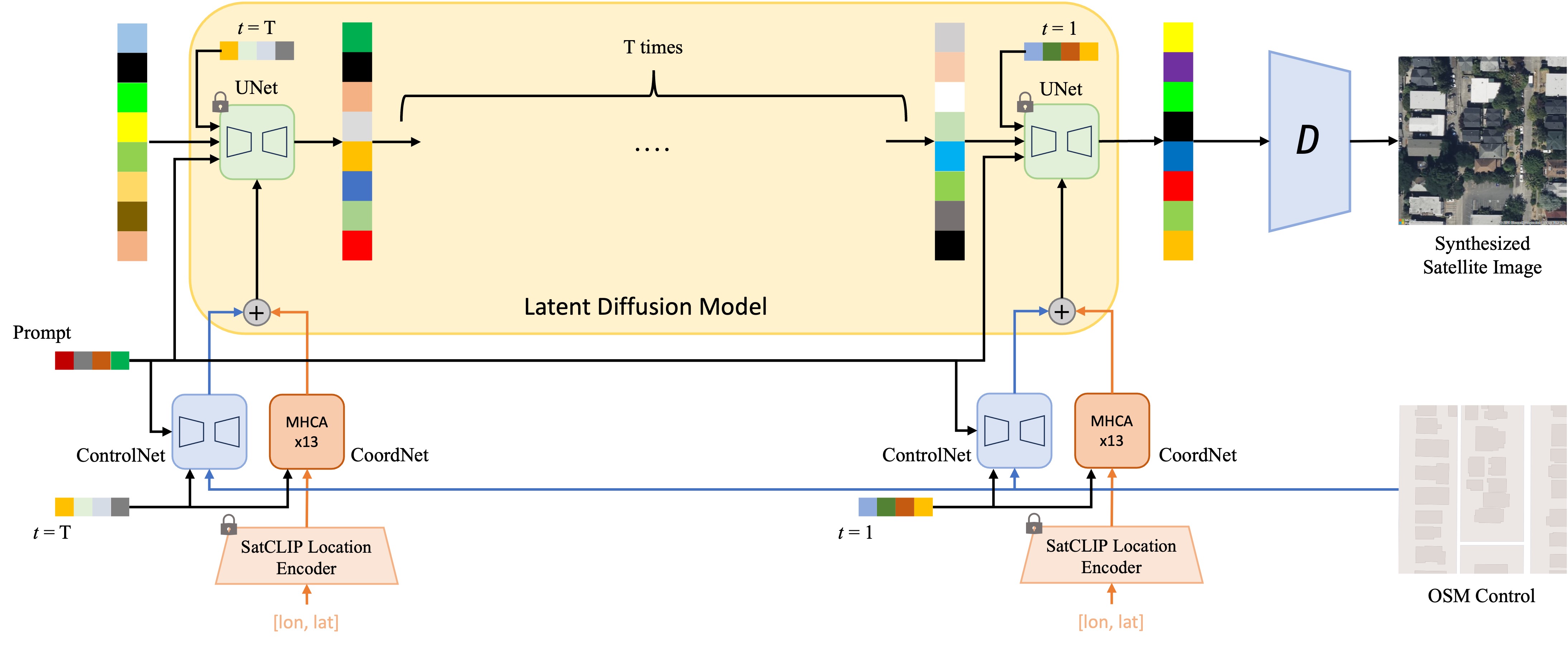

We present GeoSynth, a model for synthesizing satellite images with global style control and image-driven layout control. Global style is controlled through text prompts or geographic location. These specify scene semantics and regional appearance, respectively, and can be used together. We train our model on a large dataset of satellite imagery paired with automatically generated captions and OpenStreetMap data. We evaluate various combinations of control inputs, including different types of layout control. Results demonstrate that our model can generate diverse, high-quality images and exhibits excellent zero-shot generalization.

    from diffusers import StableDiffusionControlNetPipeline, ControlNetModel
    import torch
    from PIL import Image

    # Load the OSM tile used as the layout control image
    img = Image.open("osm_tile_18_42048_101323.jpeg")

    # Load the GeoSynth ControlNet and attach it to the Stable Diffusion base model
    controlnet = ControlNetModel.from_pretrained("MVRL/GeoSynth-OSM")
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        "stabilityai/stable-diffusion-2-1-base", controlnet=controlnet
    )
    pipe = pipe.to("cuda:0")

    # Generate an image with a fixed seed for reproducibility
    generator = torch.manual_seed(10345340)
    image = pipe(
        "Satellite image features a city neighborhood",
        generator=generator,
        image=img,
    ).images[0]
    image.save("generated_city.jpg")
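The input filename in the example, `osm_tile_18_42048_101323.jpeg`, looks like it encodes a zoom level followed by x and y tile indices in the standard OpenStreetMap slippy-map scheme. That reading is an assumption, not something stated here. Under that assumption, the following minimal sketch recovers the latitude and longitude of the tile's north-west corner, which may help when pairing a layout tile with a geographic location. The helper names `parse_tile_name` and `tile_to_latlon` are hypothetical and not part of GeoSynth or diffusers.

```python
import math
import re


def parse_tile_name(filename):
    """Extract (zoom, x, y) from a name like 'osm_tile_18_42048_101323.jpeg'.

    Assumes the three trailing integers are zoom, x, y (an assumed convention).
    """
    match = re.search(r"(\d+)_(\d+)_(\d+)\.\w+$", filename)
    if match is None:
        raise ValueError(f"no zoom/x/y triple found in {filename!r}")
    zoom, x, y = (int(g) for g in match.groups())
    return zoom, x, y


def tile_to_latlon(zoom, x, y):
    """Return (lat, lon) in degrees for the tile's north-west corner (Web Mercator)."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    lon = x / n * 360.0 - 180.0
    lat = math.degrees(math.atan(math.sinh(math.pi * (1.0 - 2.0 * y / n))))
    return lat, lon


zoom, x, y = parse_tile_name("osm_tile_18_42048_101323.jpeg")
lat, lon = tile_to_latlon(zoom, x, y)
print(f"zoom={zoom} x={x} y={y} -> lat={lat:.4f}, lon={lon:.4f}")
```

For the example filename this yields a point near 37.8° N, 122.3° W. In the slippy-map scheme the y index increases southward, so the returned coordinates mark the tile's top-left corner rather than its center.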

    @inproceedings{sastry2024geosynth,
      title={GeoSynth: Contextually-Aware High-Resolution Satellite Image Synthesis},
      author={Sastry, Srikumar and Khanal, Subash and Dhakal, Aayush and Jacobs, Nathan},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={460--470},
      year={2024}
    }